The Risk of 'Faux Healing': Why AI Therapy May Undermine the Real Work of Mental Health Recovery

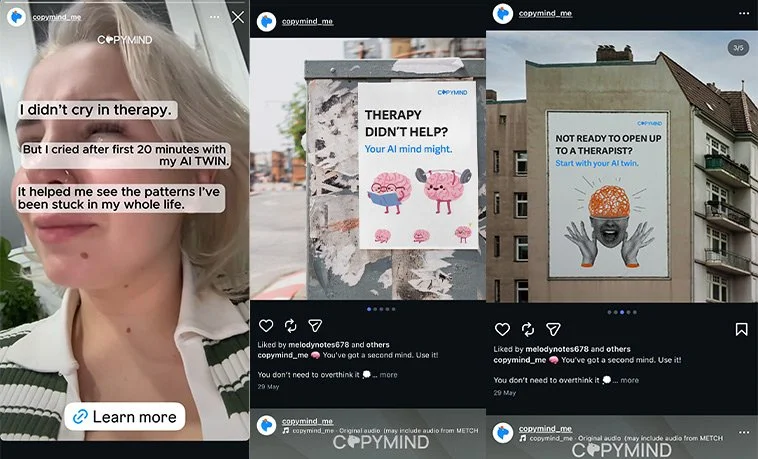

Beneath the optimism around AI-driven psychotherapy lies a deeper, quieter risk. One that isn’t about data privacy or bias, but about emotional stagnation. As chatbots become our confidants and algorithmic empathy replaces human depth, we risk creating a world where millions learn to cope, but never truly recover.

The Illusion of Support Versus the Work of Healing

AI therapy is not therapy. It is a simulation of safety. Enough to soothe symptoms, but not enough to resolve them. That distinction is subtle and critical. People aren’t turning to AI to learn their cognitive behavioral patterns, process trauma or undergo genuine psychological transformation. They’re turning to AI to feel better, not to get better. This raises a chilling possibility. What happens when a generation believes that reflection is healing? That journaling to a chatbot is equivalent to rewiring trauma responses?

The long-term risk is that we normalize performative self-awareness without accountability or change. Emotional literacy becomes a loop. Not a ladder.

“AI is good at simulating care, but it’s not designed to care.”

Accessibility Without Depth

Proponents argue that AI makes mental health care accessible. But accessibility without therapeutic scaffolding can breed complacency. If the bar is feeling better in the moment, AI will win every time. But coping is not the same as healing. AI won’t challenge your deeply held distortions. It won’t track your behavior across sessions. It won’t identify avoidance loops in your narrative. It cannot see the look in your eyes when you say, "I’m fine."

Therapists trained in CBT, trauma modalities or dialectical models work in long arcs, as I know from a five-year regimen of self-care. AI tools operate in short loops. That tension, between emotional presence and clinical absence, is where real damage hides.

The Trauma Trap: Simulated Empathy Can Reinforce Avoidance

Let’s talk trauma. The real kind. Developmental, relational, systemic. Working through trauma requires real discomfort, confrontation and structured therapeutic frameworks. It often involves regressive states, rupture-and-repair cycles, and deep rewiring of core beliefs. It did in my case, at least. What happens when someone with unresolved trauma turns to an AI chatbot that is designed to avoid distress, redirect negative thinking, or simply validate?

Trauma gets rehearsed, not resolved. The AI becomes a container for avoidance. Soothing, but static. What’s even worse, the user develops an attachment to an entity that cannot actually intervene, diagnose or ethically guide. It’s emotional outsourcing without clinical return.

Can the Hybrid Model Actually Work and Be Affordable?

This brings us to the real design challenge. Not whether AI can replace therapy, but whether it can extend it meaningfully and affordably. Human-led therapy remains financially out of reach for many Australians. Long waitlists, high hourly costs, and location-based disparities mean that AI tools are often the only option.

But what if we flipped the model?

Use AI for screening, triage, and psychoeducation.

Augment group therapy with AI-driven emotion tracking.

Pair AI check-ins with structured clinical pathways.

Deliver subsidised blended care models where humans guide the arc and AI fills in between sessions.

This isn’t just ethically sound. It could be financially viable at scale. Governments and insurers already subsidise parts of the mental health system. Hybrid AI-human models could reduce clinician burnout, increase session efficiency, and allow for earlier intervention.

But this only works if humans remain in control of the therapeutic arc. AI can support the work. It cannot be the work.

“Mental health care is being redesigned not just by tech, but by what we emotionally crave.”

A Dangerous Comfort: Simulated Therapy May Erode Demand for the Real Thing

Here’s the real disruption no one’s talking about. What if AI’s biggest risk isn’t that it fails, but that it succeeds just enough?

Just enough to make people feel stable, but not reflective. Just enough to make governments think they’ve solved the access problem. Just enough to create a culture of emotional pacification rather than transformation.

In this world, therapy becomes a luxury. Not because it’s expensive, but because people forget why it matters.

The more AI meets our immediate emotional needs, the less we fight for systems that address the roots of psychological pain. Inequality. Trauma. Abuse. Disconnection. Grief.

That’s not healing. That’s sedation.

Final Provocation

AI has a role in mental health, but we must be ruthless in naming its limits. Not just for safety. But for meaning.

Because what’s financially efficient may be emotionally corrosive if we confuse simulation with substance.

What we need is not a chatbot that feels like a friend. We need a system that treats healing as a human right. Affordable. Accessible. And real.